Big Oh, Big Omega, Big Theta: What is asymptotic notation?

Subscribe to my newsletter to hear about my latest posts. No spam, I promise.

Computer Scientists use asymptotic notation is to bind algorithmic complexity expressed as a function of its input size. Instead of focussing on a function’s exact definition (e.g.,

Where the previous part of this series focused on the intuition behind algorithm complexity using the example of a party’s organisation, this article will focus on rigorously analysing the run time of an algorithm using asymptotic notation. It will uncover the meaning of characters like

The first step of rigorous analysis is to express the runtime of an algorithm as a function of

Quick Exercise

- What would be the time complexity of this last greeting function if

- More generally, what if an arbitrary ratio

- What if

Expand to view answers

Answers:

a)

b)

c)

Now that the algorithm runtime is represented as a function of its input, we can start binding its run time as its input size grows toward infinity. This is where Big O comes in, along with our first Big Formula Warning.

Mathematical shortcut

Are you only interested in quickly finding the

To understand why this works and the meaning of the characters

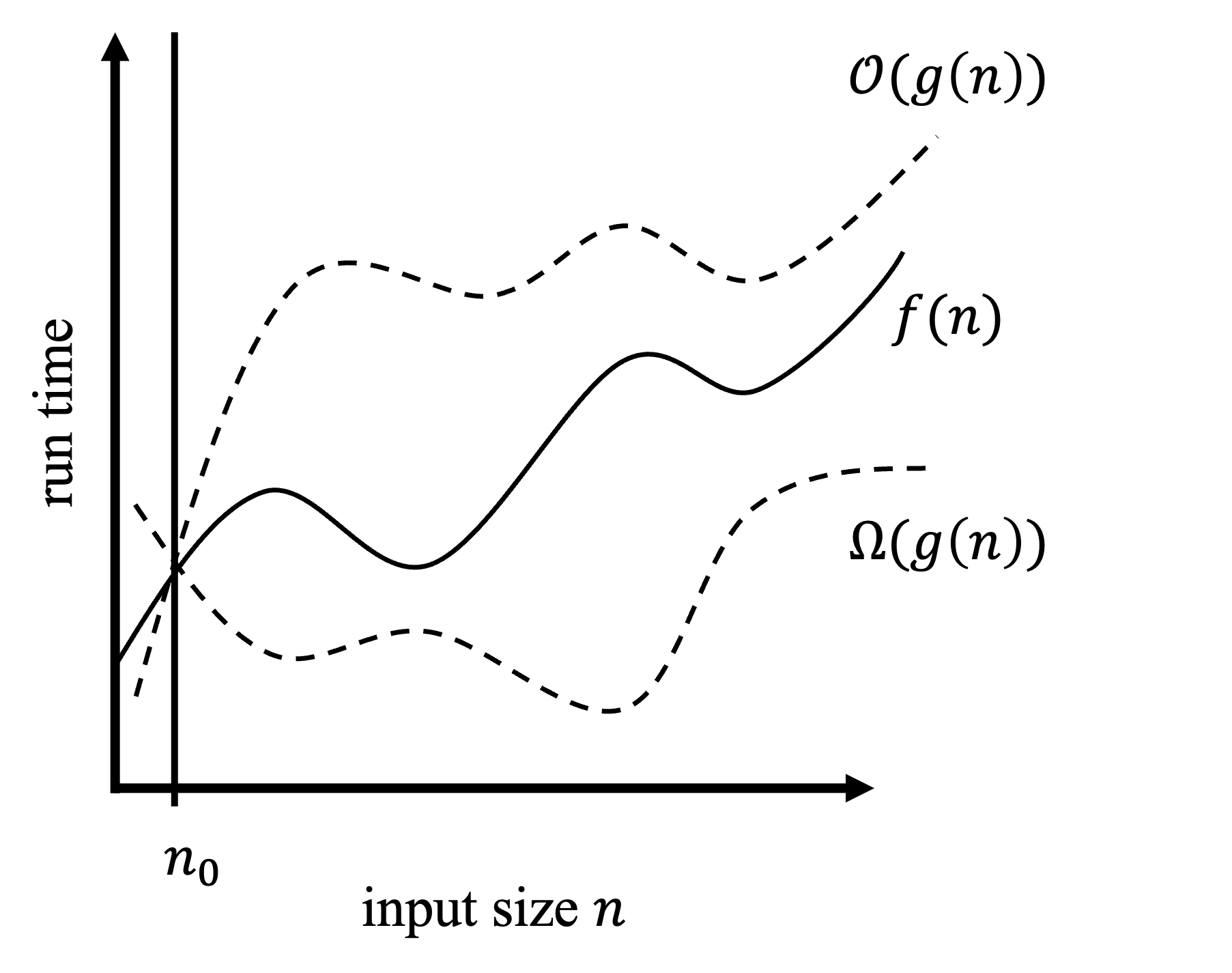

Binding functions with

A function

Let’s reword this in plain English.

In English, the statement

Let’s imagine that a party’s organisation only requires setting up the house, greeting all guests and cleaning up. Let’s also assume that setting up and cleaning up take

What would be

Now, what is the asymptotic upper-bound of this expression?

An easy mathematical shortcut there is to take the fastest growing term (here

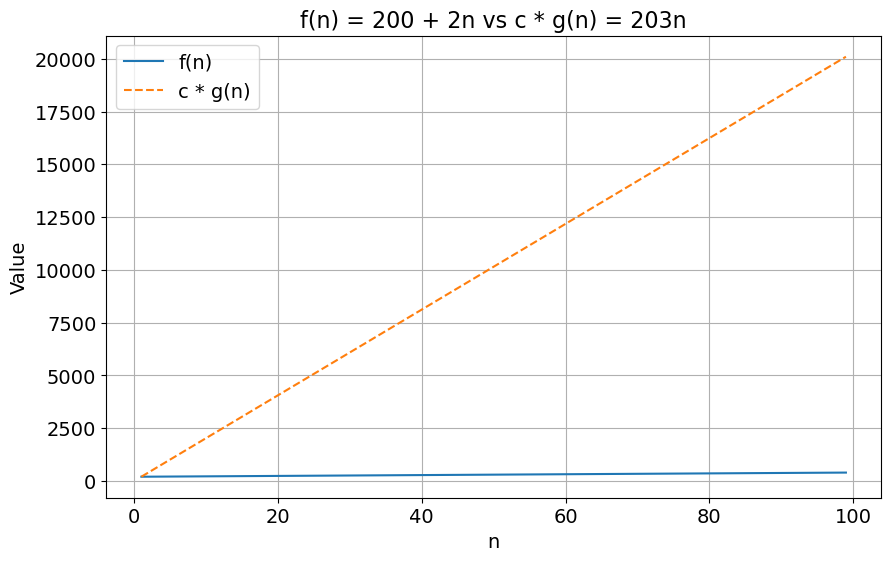

Let’s try to prove this intuition by proving the following:

With

The two sides of this inequality can be visualised in the chart below:

Code to generate plot

import matplotlib.pyplot as plt

import numpy as np

n = np.arange(1, 100)

f_n = 200 + 2 * n

g_n = 203 * n

plt.figure(figsize=(10, 6))

plt.plot(n, f_n, label='f(n)')

plt.plot(n, g_n, label='c * g(n)', linestyle='--')

plt.xlabel('n', fontsize=14)

plt.ylabel('Value', fontsize=14)

plt.title('f(n) = 200 + 2n vs c * g(n) = 203n', fontsize=16)

plt.legend(fontsize=14)

plt.xticks(fontsize=14)

plt.yticks(fontsize=14)

plt.grid(True)

plt.show()

Is

Let’s prove this using the formula

With

Job done!

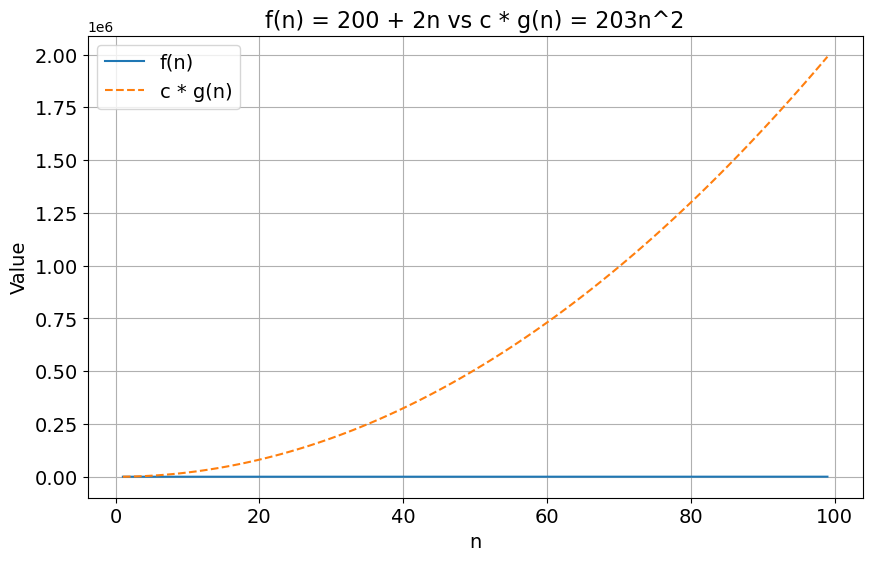

The difference of growth rate between

Code to generate plot

import matplotlib.pyplot as plt

import numpy as np

n = np.arange(1, 100)

f_n = 200 + 2 * n

g_n2 = 203 * n**2

plt.figure(figsize=(10, 6))

plt.plot(n, f_n, label='f(n)')

plt.plot(n, g_n2, label='c * g(n)', linestyle='--')

plt.xlabel('n', fontsize=14)

plt.ylabel('Value', fontsize=14)

plt.title('f(n) = 200 + 2n vs c * g(n) = 203n^2', fontsize=16)

plt.legend(fontsize=14)

plt.xticks(fontsize=14)

plt.yticks(fontsize=14)

plt.grid(True)

plt.show()Any function with upper bound

A function of complexity

A function of a given upper-bound complexity will also have all faster growing functions as upper-bound. As an example, a function of complexity

Note on

An algorithm runs in constant time or complexity

Expanding to Big-Omega (

Big-Omega (

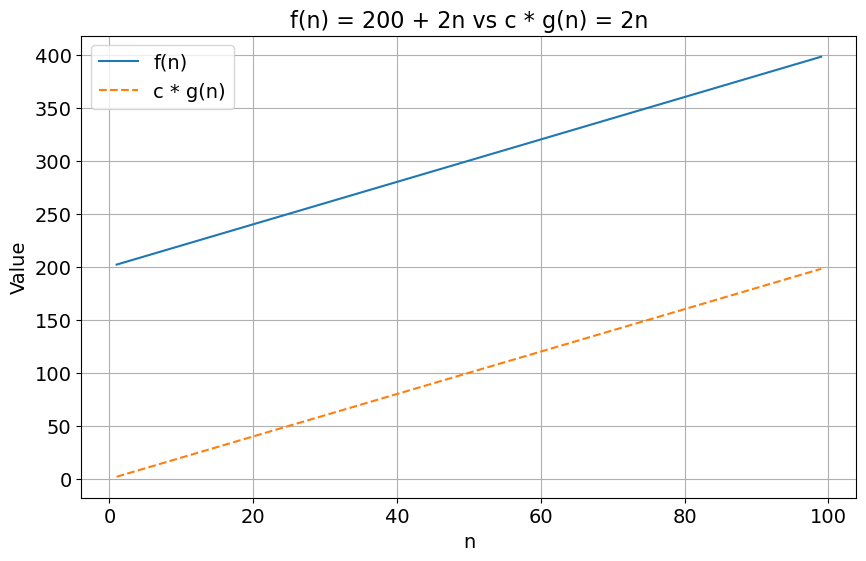

What would be the asymptotic lower bound of

Here again, the trick of identifying the fastest growing term (

Using

Code to generate plot

import matplotlib.pyplot as plt

import numpy as np

n = np.arange(1, 100)

f_n = 200 + 2 * n

g_omega_n = 2 * n

plt.figure(figsize=(10, 6))

plt.plot(n, f_n, label='f(n)')

plt.plot(n, g_omega_n, label='c * g(n)', linestyle='--')

plt.xlabel('n', fontsize=14)

plt.ylabel('Value', fontsize=14)

plt.title('f(n) = 200 + 2n vs c * g(n) = 2n', fontsize=16)

plt.legend(fontsize=14)

plt.xticks(fontsize=14)

plt.yticks(fontsize=14)

plt.grid(True)

plt.show()To test your understanding, try to answer the following questions:

- Is

- Is

Expand to see the answers

Answers

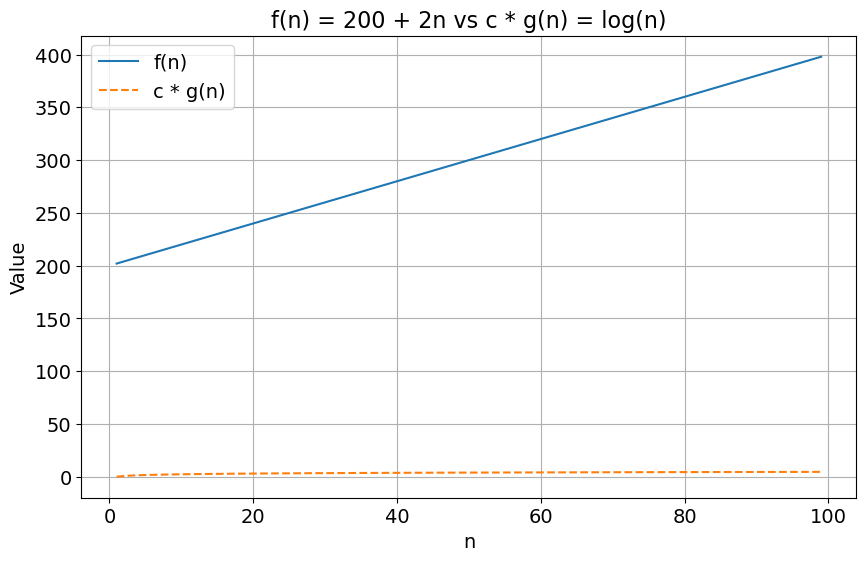

- No it is not. Because there is no constant

- Yes, as

Code to generate plot

import matplotlib.pyplot as plt

import numpy as np

n = np.arange(1, 100)

f_n = 200 + 2 * n

g_log_n = np.log(n)

plt.figure(figsize=(10, 6))

plt.plot(n, f_n, label='f(n)')

plt.plot(n, g_log_n, label='c * g(n)', linestyle='--')

plt.xlabel('n', fontsize=14)

plt.ylabel('Value', fontsize=14)

plt.title('f(n) = 200 + 2n vs c * g(n) = log(n)', fontsize=16)

plt.legend(fontsize=14)

plt.xticks(fontsize=14)

plt.yticks(fontsize=14)

plt.grid(True)

plt.show()A function with a lower-bound

Big-Theta (

When the function studied is mathematically defined, e.g.,

As an exercise, try proving this by finding constants

Hint: it is not harder than finding the upper- and lower-bound of the function.

By setting

To test your understanding, try answering the following questions:

- Is this

- Is it also

Expand to see the answers

Answers

- No, as there is no

- Similarly, there is no

Final Thoughts

Big-Oh (

Now that we understand the theory behind asymptotic notation, the following part of this series will apply this knowledge to study the complexity of Python code snippets.