What is the Complexity of Euclid’s Algorithm?

Subscribe to my newsletter to hear about my latest posts. No spam, I promise.

Euclid’s algorithm is one of the earliest algorithms ever recorded. It finds the Greatest Common Divisor or “GCD” between two integers

One simple way to find this GCD is to iterate over all integers between

Euclid’s algorithm elegantly leverages the fact that the GCD of two numbers is also the GCD of their difference to significantly reduce computation time.

Starting from our intuition, this must be better than the brute force method

Let

In the following update,

More generally,

Using the properties of the modulo operator, it can be proven that

Quickly validating this with a quick example, with

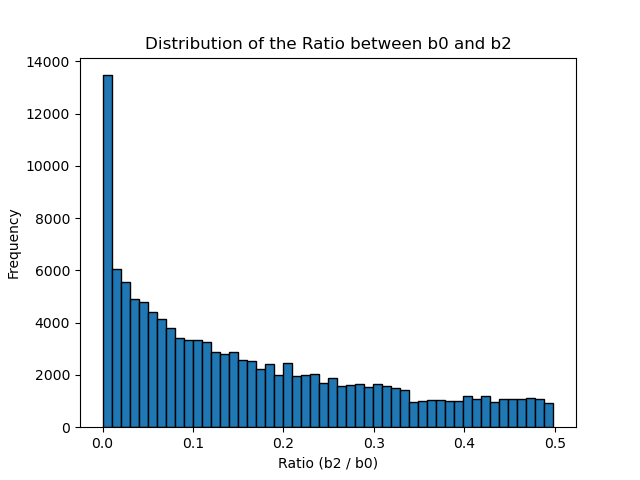

The proof is beyond the scope of this article (see Appendix for a proof), but this finding can be empirically validated by computing

Computer Simulation

Python code used to generate the chart

import matplotlib.pyplot as plt

def euclid_algorithm(a, b):

b0 = b

a1 = b

b1 = a % b

if b1 == 0:

return b0, None

a2 = b1

b2 = a1 % b1

return b0, b2

ratios = []

for a in range(1, 501):

for b in range(1, 501):

if a > b:

b0, b2 = euclid_algorithm(a, b)

if b2 is not None:

ratio = b2 / b0

ratios.append(ratio)

plt.hist(ratios, bins=50, edgecolor='black')

plt.xlabel('Ratio (b2 / b0)')

plt.ylabel('Frequency')

plt.title('Distribution of the Ratio between b0 and b2')

plt.show()Achieving Logarithmic Upper-Bound

Given the fact the upper-bound

Using our Computer Science intuition, the division by 2 points to a logarithmic decrease.

As

Putting this into mathematical form, we get:

Final Thoughts

In its beautiful simplicity, Euclid’s algorithm is a prime example of leveraging information about a problem to avoid exhaustive search. By exploiting the fact that two numbers’ Greatest Common Divisor is also the Greatest Common Divisor of their difference, this algorithm recursively reduces the problem - reaching a time complexity of

Appendix

Proving

Before going into further details, let’s remember how Euclid’s algorithm works with the example of

We can summarise the steps in the following table:

| Step Number | ||

|---|---|---|

| 0 | 72 | 54 |

| 1 | 54 | 18 |

| 2 | 18 | 0 |

The update rule of Euclid’s algorithm is as follows:

Now, how to prove that

Case 1:

In this case,

Case 2:

We observe the following:

If

This is due to the division rule of the quotient and remainder:

So, the remainder is

Substituting equation

From

Substituting back into equation

Thus, in both cases, we have shown that